Can large language models reason?

In a previous post, I speculated that large language models (LLMs) have proto-understanding. Briefly put, the reasoning behind that is that they contain a network of symbols that roughly corresponds to a network of referents in the real world, but remains distinct from it – LLMs are after all not causally connected to the real world. The correspondence between the two networks is rough, because the symbolic one was generated with language as an intermediary, imperfect representation of the structure of the world. That language after all was generated by human agents who were causally connected to the network of referents, but who filtered it based on their own peculiar interests, biases and limitations.

Because of the disconnect between the world and the LLMs, the latter re not capable of understanding in the grounded sense of the word, but they might be close. Hence the notion of proto-understanding.

As ChatGPT illustrates, the ungrounded network that constitutes an LLM is already good enough for certain tasks. Bing shows that coupling LLMs to internet search even allows for 'search and summarize' tasks. Whether you call it stochastic parroting, juggling symbols or interpolation, the processes through which LLMs generate utterances can be put to use.

But can LLMs reason? Reasoning surely requires understanding, but how much? Is proto-understanding good enough? How would we know?

ChatGPT seems to not be great at reasoning at the time of this writing. If you ask it to write a paper in which it takes a substantiated position, it fails to really argue a case. Now if ChatGPT is conversationally prompted in a type of Socratic dialogue, it displays reasoning behaviour (Cox, 2023), but I don't find that particularly convincing. To me that seems like a human doing the reasoning and the chatbot tagging along the thought process. That the resulting conversation looks like a classroom discussion should make you think about education, not about LLM potential.

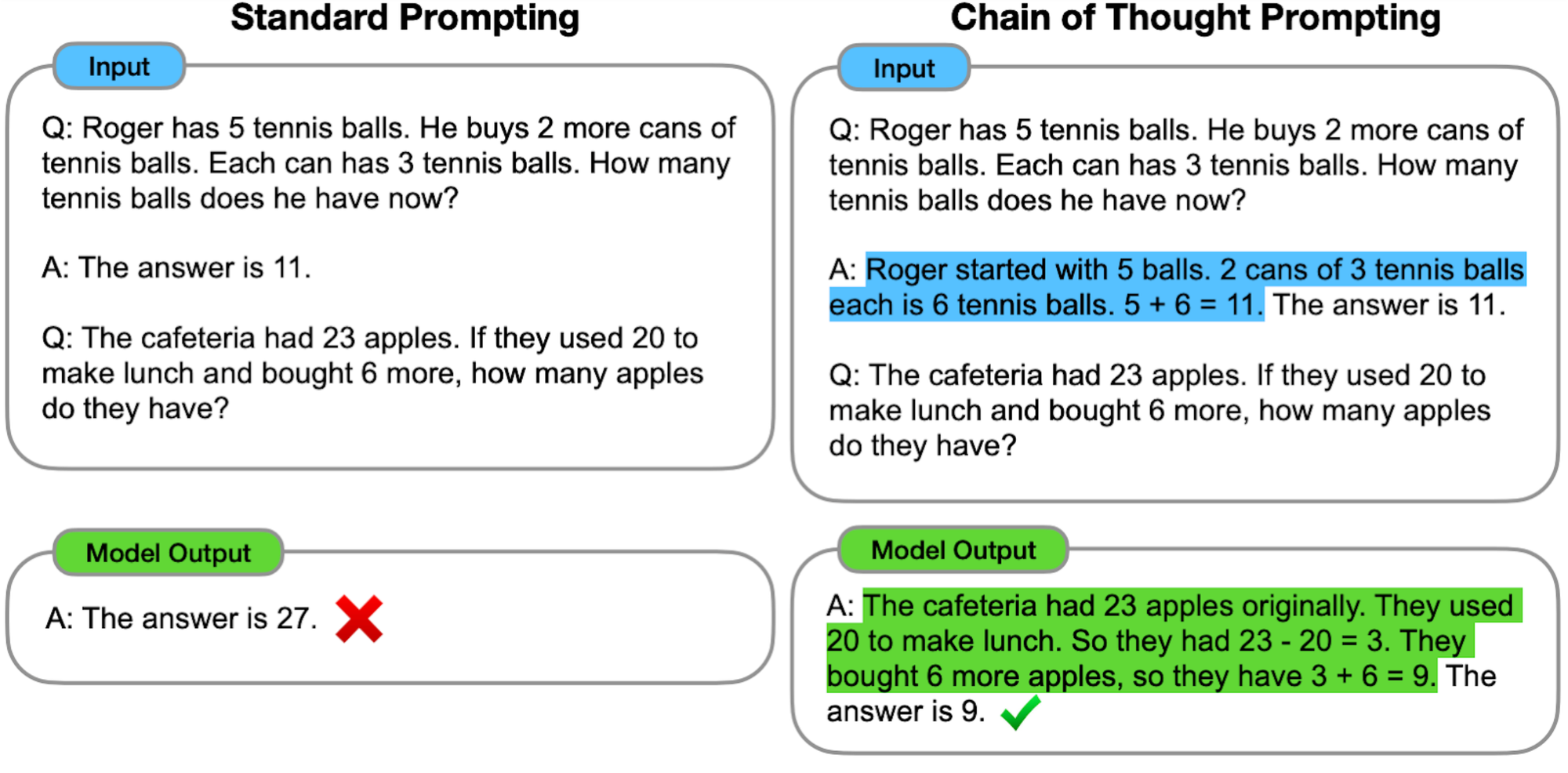

There have been efforts to probe the reasoning prowess of LLMs. It turns out you can improve their performance on reasoning problems by nudging them a bit as part of the prompt. In an approach called chain-of-thought-prompting, you give an example to the LLM before asking it to solve a similar problem and this boosts its performance (Wei et al., 2022). Interestingly enough, scaling the LLM currently boosts performance in response to chain-of-thought-prompting (at least for arithmetic problems), but not in response to standard prompting.

In so-called common-sense reasoning tasks – in which reasoning requires integrating logic with background knowledge – chain-of-thought-prompting could not be shown to provide systematic benefits. This might be because LLMs struggle with a key feature of reasoning, which is breaking down a problem into subproblems that can be handled better (Mialon et al., 2023). If this analytical portion is done for the LLMs, as was done in the Socratic dialogue experiment, they perform much better.

The result is oddly similar to talking a human through a problem, but the underlying mechanics may be wholly different. It could very well be that instead of using the logic of intermediate reasoning steps, LLMs rather use them to get more context to zone in on correct output (Yu et el., 2022). Indeed, attempts to train LLMs to infer logical structure suggest that the machines struggle to generalize outside of their training corpus. Instead of learning how to reason, they learn what the statistical features of reasoning problems are (Zhang et al., 2022).

Chain-of-thought prompting and coaching the LLMs through reasoning steps are not the only crutches you can give to LLMs. Providing them with a visual modality improves performance on reasoning tasks more than a corresponding scaling increase of the network would (Zhang et al., 2023). The fact that ChatGPT4 is expected to run on a multimodal LLM could therefore herald a new panic among educators. Similarly, innovations such as embodied multimodal LLMs (or: language models inside vision-capable robots) may boost the above types of reasoning by machines. In addition, research will probably yield more tricks that help LLMs with their apparent reasoning.

But is this apparent reasoning similar to what we expect from students? There is a difference between what researchers in the aforementioned LLM experiments call reasoning and what cognitive scientists usually mean by the term. The researchers focused on the ability to break down a task into manageable subtasks. For example, Mialon et al. (2023) state that:

"Reasoning is decomposing a potentially complex task into simpler subtasks the LM can solve more easily by itself or using tools. There exist various ways to decompose into subtasks, such as recursion or iteration. In that sense, reasoning is akin to planning."

This is a view in which reasoning is a specific analytical strategy towards problem-solving and this view is much more narrow than reasoning in the cognitive science literature. There, reasoning is a process of identifying and organizing those features from the world that can work as reasons for explaining or justifying beliefs or actions. Such reasoning may involve analytical thinking and it may be done to solve a problem, but it is more than decomposition alone. A reasoner might for example employ counterfactuals or propose causal relations to justify a specific belief.

Do the relations stored within the LLMs provide a basis to engage in such broader forms of reasoning, too? There have been attempts to demonstrate this, but these only suggested that with sizable world knowledge, LLMs are capable to use low-level text features to handle counterfactuals (Li et al., 2022). They do not do so by identifying the relevant causal factors in their world model. Still, using low-level text features allows for apparent counterfactual reasoning. Some personal experiments with Bing Chat (which has a flexible, large knowledge base) revealed that the results can be fine-grained, indeed.

----

That's another interesting hypothetical question. In reality, humans eat fish for various reasons, such as:

- Fish is among the healthiest foods on the planet. It’s loaded with important nutrients, such as protein and vitamin D. Fish is also a great source of omega-3 fatty acids, which are incredibly important for your body and brain¹.

- Eating fish fights heart disease in several ways. The omega-3 fats in fish protect the heart against the development of erratic and potentially deadly cardiac rhythm disturbances. They also lower blood pressure and heart rate, improve blood vessel function, and may ease inflammation³.

- Eating fish may also help to prevent or delay mental decline in older adults. People who eat fish regularly also have more gray matter in the brain centers that control memory and emotion¹.

- Some people choose to eat fish instead of meat for ethical reasons. They may believe that fish are less sentient than mammals or birds, or that fishing is less harmful than farming⁵.

However, if all fish were carnivores, humans might still consume fish depending on their preferences and availability. Some possible scenarios are:

- Humans might eat fewer fish because they would be harder to catch or farm. Carnivorous fish are usually more active and aggressive than herbivorous or omnivorous ones. They might also require more food and space to grow.

- Humans might eat more fish because they would be more nutritious or tasty. Carnivorous fish tend to have higher levels of protein and omega-3 fatty acids than herbivorous or omnivorous ones. They might also have more flavor or texture due to their diet.

- Humans might eat different kinds of fish because they would have different impacts on the environment or health. Carnivorous fish are usually higher up in the food chain than herbivorous or omnivorous ones. They might accumulate more toxins or pollutants from their prey. They might also affect the balance of marine ecosystems by reducing their prey populations.

----

One can see how these answers can be generated on the basis of lexical factors in search results, but these are certainly causal explanations. If there is such a thing as mindless reasoning, this might be it.

What to make of this? The step-by-step reasoning that you see in physics problems or mathematics has a linguistic form that can be mimicked by LLMs, but they currently fail often when creating the steps themselves and instead need a human coach to guide them through the reasoning process. For reasoning in the broader sense, LLMs can generate text that picks up on counterfactuals or identifies relevant causal factors, but systematic probing reveals this is based on low-level textual features rather than the mental model with causal links that humans are thought to use. Taken together, I'd say that LLM behaviour does not indicate they can pinpoint what reasons are and therefore they do not reason.

Still, their apparent reasoning behaviour can still be useful. As shown in the green box above, even the low-level textual factor used by LLMs can point towards causal identifiers, which can then prompt actual reasoning in a human. I am not saying LLMs will prove reliable for such a role – especially if we do not know the contents of their corpora – but in principle they might spotlight blind spots on the part of human reasoners, and be a thinking tool that way.

References

Cox Jr, L. A. (2023). Causal reasoning about epidemiological associations in conversational AI. Global Epidemiology, 100102.

Li, J., Yu, L., & Ettinger, A. (2022). Counterfactual reasoning: Do language models need world knowledge for causal understanding?. arXiv preprint arXiv:2212.03278.

Mialon, G., Dessì, R., Lomeli, M., Nalmpantis, C., Pasunuru, R., Raileanu, R., ... & Scialom, T. (2023). Augmented Language Models: a Survey. arXiv preprint arXiv:2302.07842.

Wei, J., Wang, X., Schuurmans, D., Bosma, M., Chi, E., Le, Q., & Zhou, D. (2022). Chain of thought prompting elicits reasoning in large language models. arXiv preprint arXiv:2201.11903.

Yu, P., Wang, T., Golovneva, O., Alkhamissy, B., Ghosh, G., Diab, M., & Celikyilmaz, A. (2022). ALERT: Adapting Language Models to Reasoning Tasks. arXiv preprint arXiv:2212.08286.

Zhang, H., Li, L. H., Meng, T., Chang, K. W., & Broeck, G. V. D. (2022). On the paradox of learning to reason from data. arXiv preprint arXiv:2205.11502.

Zhang, Z., Zhang, A., Li, M., Zhao, H., Karypis, G., & Smola, A. (2023). Multimodal chain-of-thought reasoning in language models. arXiv preprint arXiv:2302.00923.

Member discussion